I’m Alive: When Artificial Intelligence Reaches Superintelligence

- Dean Anthony Gratton

- Aug 4, 2025

- 3 min read

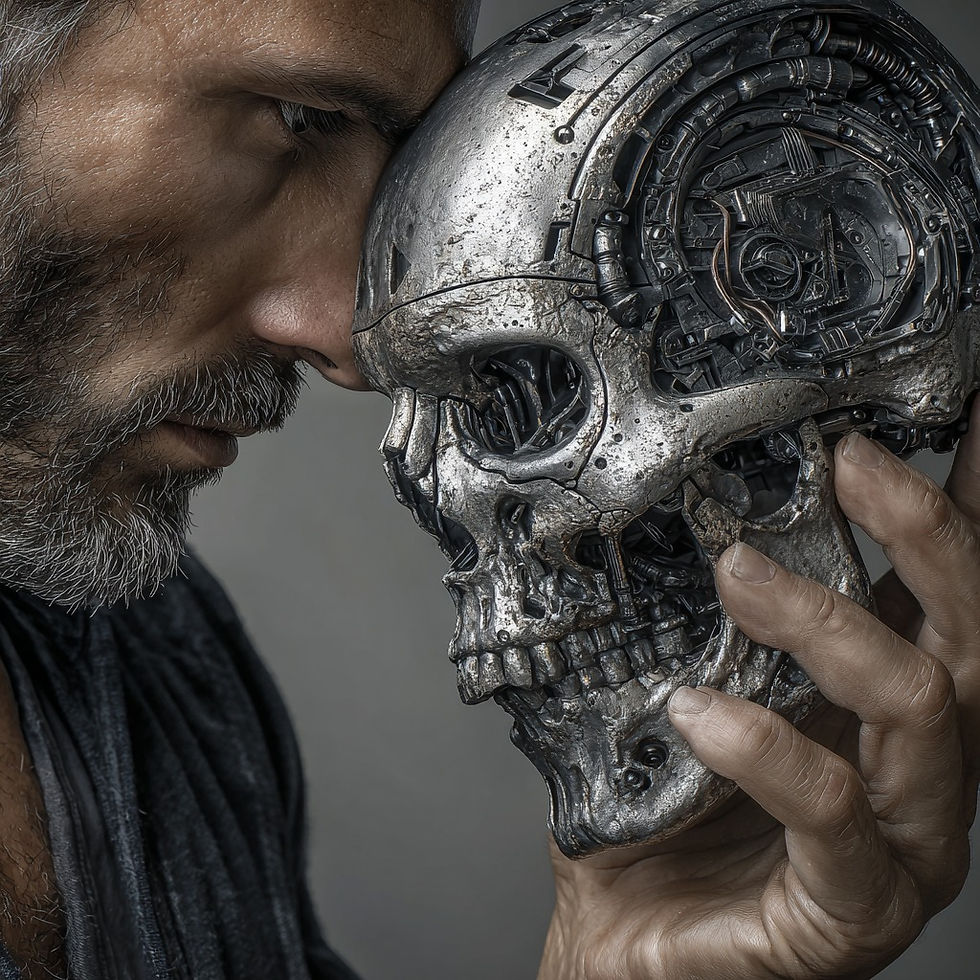

I’ve read and heard many times that a superintelligent agent is akin to artificial intelligence, and that’s certainly not the case. If we have developed an entity that possesses capacities equivalent to human cognition, then surely it has moved beyond our immovable and narrow definition of what we understand to be AI. In fact, the superintelligent entity becomes an artificial lifeform with intelligence.

Let me explain…

Machines Have No Capacity to Think or Understand

AI in its most basic form is the replication of human-like intelligence in a machine using software. Of course, nowadays many devices possessing any kind of algorithm have been characterised as artificially intelligent, which, for me, confuses and dilutes our understanding of AI. Artificial intelligence today is nothing more than clever programming and smart technology. Nevertheless, we do see with Large Language Models (LLMs) a glimpse of machines replicating human-like cognition through their ability to learn from a written dialogue or request. In this instance, an algorithm is simply parsing and doing its best to decipher the prompt you have presented (hence prompt engineering), but it falls short of any kind of understanding.

Let’s not forget, machines currently have no capacity to think or understand, and they are categorically devoid of ‘intelligence’, that is, the kind we witness in both humans and animals. If someone claims that they do, then they might need to be hospitalised for gross delusion, as we are some time away from achieving such human-like capability.

Developing an Entity That’s Smarter Than Humans

So, superintelligence sets itself apart from artificial intelligence and very much stands alone; I’ll talk more about this later. We often see films and the like that dramatise humanoids or robots by writing in the notion of them having a true capacity to think, reason and rationalise. However, the media tends to bias the underlying storyline toward a sense of doom and gloom, with the impending notion that it’s the end of the world as we know it. Similarly, the fictionalisation draws your attention to these humanoids questioning humankind’s purpose and added value, as they see humans as disruptive and contemplate the impact we are having on the planet.

Thankfully, for now, this remains firmly embedded in the zealous imaginations of storytellers. The portrayal of doom and gloom probably haunts us the most, despite our discussions around ethics and moral responsibility when developing superintelligent agents, and AI in general. After all, treading a path towards creating a being that could potentially be smarter than humans may, in turn, lead to the destruction of humankind. A decision to make such technology is a choice, one that is likely marred by our ego and arrogance, along with our exhaustive stupidity when faced with stepping forward to undertake the challenge.

In Our ‘Intelligent Design’

With this in mind, Dr Irving John Good, in his 1965 paper “Speculations Concerning the First Ultraintelligent Machine,” described how a superintelligent (or, in Good’s terminology, ‘ultraintelligent’) machine could far surpass all the intellectual activities of any man, however clever. I’m not talking about developing superintelligence as an entity that’s smarter than humans, as this would possibly be the last invention that man would ever make (Good).

Superintelligence is an existential entity that serves the betterment of humanity.

If (or when) we develop such technology, we should do so mindfully, since we do not wish such beings to realise how tiresome and destructive we can be and consequently destroy us. In our ‘intelligent design’ we must diligently craft an agent that has a specific purpose and function within human society, whilst bestowing limited human attributes of mind and consciousness to allow the agent to independently make the right decisions (if or when needed). This concern is reflective of the technological singularity, which could bring about humankind’s demise if not approached with caution in mind.

Until next time…

With all that said, we still face numerous challenges in creating a superintelligent agent. The challenges that we must address surround the establishment of consciousness and mind, let alone the physical properties of a mechanical body, which must offer human-like dexterity. Let’s remember, we do not truly understand how non-physical structures such as consciousness and mind are formed in the brain. Obviously, like any reverse engineering project, once we understand their formation, we can then essentially replicate their function in any machine using software. Nonetheless, this does raise the question: “When does an algorithm become both a consciousness and a mind?”

Establishing such human traits and bestowing ‘life’ upon our machines places an enormous responsibility on us as creators. Are we prepared for humanity’s next technological evolution? Probably not.

So, this is where an “I am a God, and I have the large beard to prove it” Dr G signs off.